Artificial intelligence is no longer a research experiment. In 2026, it is infrastructure. From enterprise copilots to verticalized LLM deployments, companies are no longer asking if they should train or fine-tune models: they are asking how to do it faster, more predictably, and without burning capital on inefficient hardware.

The answer increasingly points toward purpose-built GPU dedicated servers.

This guide breaks down what matters, what doesn’t, and how to think about AI training servers from both a technical and performance-efficiency standpoint.

Why GPU Dedicated Servers Are Essential for AI Training in 2026

Growth of LLMs & Generative AI

The rise of models like GPT-4 and open-weight model families such as LLaMA has dramatically increased compute requirements. Training runs that once fit on a single GPU now demand multi-GPU parallelization, high-throughput storage, and massive memory bandwidth.

Fine-tuning, RAG pipelines, diffusion models, and video generation workloads all compound the need for dedicated GPU environments. Shared cloud GPUs often introduce performance variability that disrupts training timelines.

For organizations building revenue-generating AI applications, that unpredictability becomes a financial issue.

Why CPU-Only Servers Fail for Modern AI

Traditional CPU servers struggle with matrix-heavy workloads that define deep learning. AI training depends on massive parallel floating-point operations. CPUs are optimized for sequential tasks and general compute, not thousands of simultaneous tensor operations.

A CPU-only system may technically “work,” but training times grow by orders of magnitude, energy costs spike, and experimentation slows. In competitive AI environments, slower iteration cycles directly reduce innovation velocity. GPU servers for machine learning solve this by parallelizing compute at scale.

GPU Memory & Parallel Processing Advantages

Modern AI models are memory-hungry. Transformer architectures require significant VRAM to hold weights, gradients, and intermediate tensors during training.

High-performance GPUs provide massively parallel CUDA cores, high-bandwidth memory (HBM on enterprise GPUs), tensor core acceleration, and optimized AI instruction sets. This is why RTX 4090 dedicated servers and A100 bare metal servers consistently outperform general-purpose compute environments for AI training.

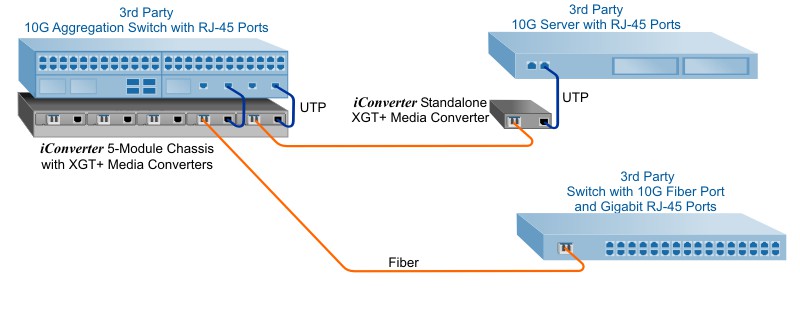

For large-scale training jobs, pairing high-VRAM GPUs with 10 Gbps port options and 100TB bandwidth ensures that dataset ingestion and distributed cluster sync do not become bottlenecks.

Key Features to Look for in an AI GPU Dedicated Server

Selecting the best GPU for AI training is not about brand preference; it’s about matching architecture to workload.

GPU Model (RTX 4090 vs A100 vs RTX 6000 Ada)

Prosumer GPUs like the NVIDIA GeForce RTX 4090 deliver exceptional price-to-performance for fine-tuning, inference, and mid-sized LLM training. With 24GB of VRAM and high CUDA core counts, RTX 4090 dedicated servers remain one of the most efficient AI server hardware options in 2026.

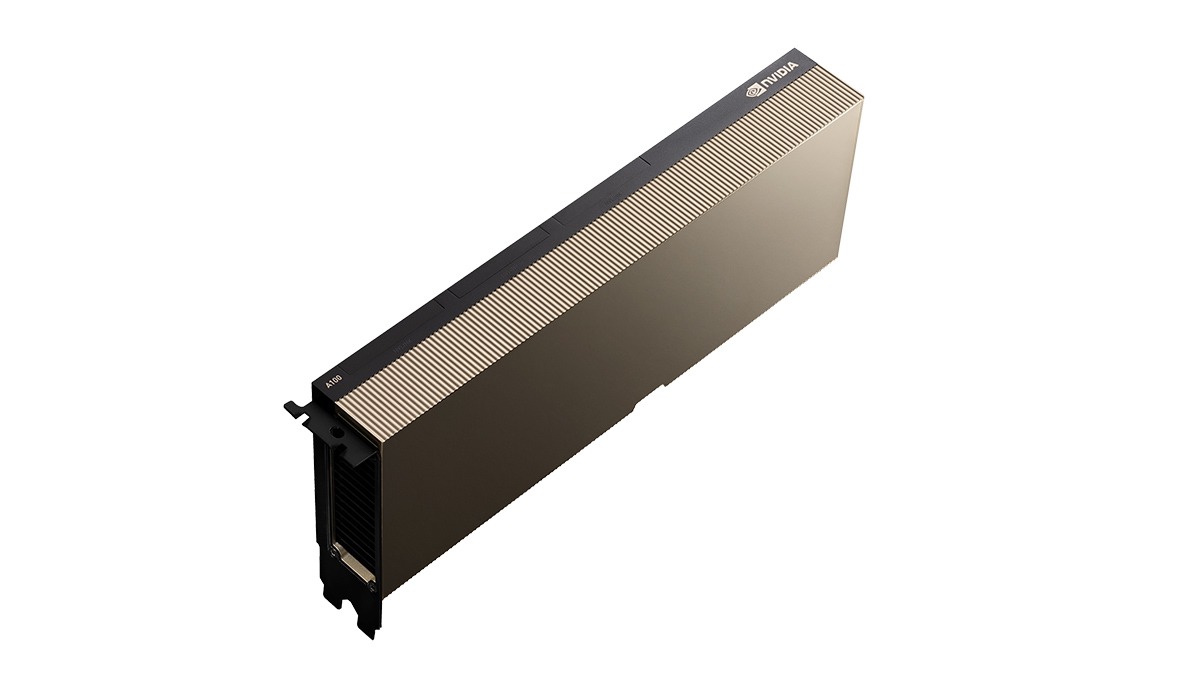

Enterprise-grade accelerators like the NVIDIA A100 (especially 80GB variants) are designed for large model training and multi-GPU distributed environments. They support NVLink, offer higher memory bandwidth, and excel in cluster deployments.

The NVIDIA RTX 6000 Ada Generation sits between these categories, offering a larger 48GB VRAM footprint and professional stability for AI research labs and enterprise inference clusters.

The right choice depends on model size, concurrency needs, and scaling plans.

VRAM Capacity Requirements (24GB vs 48GB vs 80GB)

VRAM determines what model sizes you can train without aggressive sharding or offloading.

• 24GB (RTX 4090 class): Ideal for fine-tuning 7B–13B parameter models, typically with mixed precision and memory-efficient methods

• 48GB: Better for mid-sized LLMs and larger batch sizes

• 80GB (A100 class): Suitable for large model training and multi-node scaling

Underestimating VRAM forces memory offloading and heavier reliance on gradient checkpointing, both of which slow training. Overestimating wastes capital. Right-sizing VRAM is one of the most important AI server hardware decisions.
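As a rough sizing aid, the sketch below estimates memory for model state only. It assumes the commonly cited figure of about 16 bytes per parameter for full mixed-precision training with Adam (16-bit weights and gradients, plus a 32-bit master copy and two optimizer moments), 2 bytes per parameter for a frozen 16-bit base model, and 0.5 bytes per parameter for a 4-bit quantized base. Activations, adapter weights, and framework overhead are not counted, so the 80% usable fraction is a hypothetical safety margin rather than a measured value. All function names are illustrative.

```python
# Bytes of model state per parameter under each (assumed) training setup.
BYTES_PER_PARAM = {
    "full_mixed_precision_adam": 16.0,  # fp16 weights + grads, fp32 master copy + 2 Adam moments
    "frozen_16bit_base": 2.0,           # base weights only, e.g. adapter-style fine-tuning
    "frozen_4bit_base": 0.5,            # 4-bit quantized base weights
}


def model_state_gb(params_billions: float, setup: str) -> float:
    """Approximate model-state memory in decimal GB (activations excluded)."""
    return params_billions * BYTES_PER_PARAM[setup]


def fits_in_vram(params_billions: float, setup: str, vram_gb: float,
                 usable_fraction: float = 0.8) -> bool:
    """True if model state fits within the assumed usable share of VRAM."""
    return model_state_gb(params_billions, setup) <= vram_gb * usable_fraction


if __name__ == "__main__":
    for size in (7, 13):
        for setup in BYTES_PER_PARAM:
            print(f"{size}B {setup}: {model_state_gb(size, setup):.1f} GB, "
                  f"fits 24GB: {fits_in_vram(size, setup, 24)}, "
                  f"fits 80GB: {fits_in_vram(size, setup, 80)}")
```

Under these assumptions, full fine-tuning of a 7B model exceeds a single card on model state alone, which is why 24GB-class fine-tuning usually relies on frozen or quantized base weights, and why larger runs shard state across multiple GPUs.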

CPU & PCIe Lanes (Critical for Data Throughput)

Many overlook CPU architecture when selecting AI training servers.

High-core-count CPUs such as AMD EPYC or Ryzen platforms provide sufficient PCIe lanes to avoid GPU bandwidth throttling. Insufficient lanes can bottleneck multi-GPU systems, reducing overall training efficiency.

A balanced architecture offers adequate PCIe Gen4/Gen5 lanes, strong single-thread performance for orchestration, and sufficient RAM for dataset preprocessing. Without this balance, even the best GPU for AI training can be underutilized.
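A quick lane budget makes the bottleneck concrete. The sketch below assumes each GPU uses an x16 link, each NVMe drive an x4 link, and the network card an x8 link, with roughly 1.97 GB/s per Gen4 lane and 3.94 GB/s per Gen5 lane in each direction. The CPU lane counts passed in the usage lines are illustrative inputs, not specifications for any particular platform.

```python
# Approximate usable bandwidth per PCIe lane, per direction, in GB/s.
GBPS_PER_LANE = {"gen4": 1.97, "gen5": 3.94}


def lanes_required(num_gpus: int, num_nvme: int, nic_lanes: int = 8,
                   lanes_per_gpu: int = 16, lanes_per_nvme: int = 4) -> int:
    """Total lanes needed if every device gets its full link width."""
    return num_gpus * lanes_per_gpu + num_nvme * lanes_per_nvme + nic_lanes


def gpu_link_bandwidth(generation: str, lanes: int = 16) -> float:
    """Approximate one-direction bandwidth of a GPU link in GB/s."""
    return GBPS_PER_LANE[generation] * lanes


def is_lane_starved(cpu_lanes: int, num_gpus: int, num_nvme: int) -> bool:
    """True if the devices need more lanes than the CPU platform exposes."""
    return lanes_required(num_gpus, num_nvme) > cpu_lanes


if __name__ == "__main__":
    print(lanes_required(num_gpus=4, num_nvme=2))                 # lanes needed for a 4-GPU build
    print(is_lane_starved(cpu_lanes=128, num_gpus=4, num_nvme=2))  # hypothetical server platform
    print(is_lane_starved(cpu_lanes=24, num_gpus=2, num_nvme=1))   # hypothetical desktop platform
```

When the budget is exceeded, GPUs typically drop to narrower links, which halves or quarters the host-to-device bandwidth computed by `gpu_link_bandwidth`.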

NVMe Storage Speed

AI workloads constantly read and write massive datasets. Fast NVMe Gen4 or Gen5 drives reduce training latency during data loading.

Look for high-IOPS NVMe drives, RAID configurations for redundancy, and sufficient scratch disk capacity.

Storage speed directly impacts iteration time, especially in image, video, and multimodal training.
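One way to check whether storage will hold back training is to compare the read rate the data loader needs against what the drive can sustain. The sketch below is a simplification: it ignores caching, decompression, and random-access penalties, and the sample sizes and drive speeds in the usage lines are hypothetical.

```python
def required_read_mb_per_s(samples_per_second: float, avg_sample_kb: float) -> float:
    """Sustained read rate (MB/s) needed so the data loader keeps up with the GPUs."""
    return samples_per_second * avg_sample_kb / 1000.0


def storage_is_bottleneck(samples_per_second: float, avg_sample_kb: float,
                          drive_read_mb_per_s: float) -> bool:
    """True if the drive cannot sustain the read rate the training loop consumes."""
    return required_read_mb_per_s(samples_per_second, avg_sample_kb) > drive_read_mb_per_s


if __name__ == "__main__":
    need = required_read_mb_per_s(samples_per_second=2000, avg_sample_kb=500)
    print(f"required read rate: {need:.0f} MB/s")
    print("slow drive bottleneck:", storage_is_bottleneck(2000, 500, drive_read_mb_per_s=550))
    print("fast drive bottleneck:", storage_is_bottleneck(2000, 500, drive_read_mb_per_s=5000))
```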

Network Bandwidth (10Gbps vs 1Gbps)

Network throughput becomes critical in distributed AI clusters.

A 1Gbps port may suffice for isolated training, but for multi-node training, large dataset ingestion, remote backup sync, and model deployment pipelines, 10Gbps port options dramatically reduce bottlenecks. Paired with 100TB bandwidth allocations, teams can train and move data without artificial throttling.
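The difference between port speeds is easiest to see as transfer time. The sketch below converts a dataset size into hours on the wire, assuming decimal terabytes and a hypothetical 90% link efficiency to account for protocol overhead. Real throughput also depends on the remote endpoint and storage on both sides.

```python
def transfer_hours(data_tb: float, link_gbps: float, efficiency: float = 0.9) -> float:
    """Hours needed to move data_tb decimal terabytes over a link of link_gbps."""
    bits = data_tb * 1e12 * 8                      # terabytes -> bits
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    for gbps in (1, 10):
        print(f"5 TB over {gbps} Gbps: {transfer_hours(5, gbps):.1f} hours")
```

Because transfer time scales inversely with link speed, a dataset sync that occupies most of a working day on a 1Gbps port takes about a tenth of that on 10Gbps.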

Power & Cooling Redundancy

High-performance GPUs generate significant heat. AI training environments require redundant power feeds, data center-grade cooling and rack-level airflow management. Without infrastructure stability, thermal throttling can degrade GPU performance over long training cycles.
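Thermal behavior can be watched during long runs by polling `nvidia-smi`. The sketch below queries temperature and SM clock per GPU and flags cards that look hot or slow. The 83°C limit and the 80% clock ratio are hypothetical thresholds chosen for illustration, and a low clock only suggests throttling when the GPU is under sustained load, since idle GPUs also downclock. Some GPUs report `[N/A]` for certain fields, which this minimal parser does not handle.

```python
import subprocess

QUERY = "index,temperature.gpu,clocks.sm,clocks.max.sm"


def read_gpu_stats() -> str:
    """Run nvidia-smi and return its CSV output (requires an NVIDIA driver)."""
    cmd = ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def find_hot_gpus(csv_text: str, temp_limit_c: int = 83,
                  min_clock_ratio: float = 0.8) -> list:
    """Return indices of GPUs at or above the temperature limit or below the clock ratio."""
    flagged = []
    for line in csv_text.strip().splitlines():
        index, temp, clock, max_clock = (int(value) for value in line.split(","))
        if temp >= temp_limit_c or clock < max_clock * min_clock_ratio:
            flagged.append(index)
    return flagged


if __name__ == "__main__":
    # Made-up sample output for illustration; call read_gpu_stats() on a real GPU host.
    sample = "0, 71, 2520, 2520\n1, 88, 1400, 2520\n"
    print("GPUs to inspect:", find_hot_gpus(sample))
```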

When to Choose Custom AI Clusters

Single-GPU servers are powerful. But scaling AI initiatives often requires custom AI clusters.

Cluster deployments enable distributed training, horizontal scaling, model parallelism, and high-availability inference.

Combining multiple RTX 4090 dedicated servers or A100 bare metal servers into coordinated clusters allows teams to scale model size and reduce training time significantly.
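The core idea behind distributed data-parallel training can be shown without any GPU framework. In the toy sketch below, each "worker" computes a gradient on its own shard of the batch, and the shard gradients are averaged, which stands in for the all-reduce step a real cluster performs over the network. The one-parameter model and the data are made up for illustration, and the batch is assumed to divide evenly across workers.

```python
def gradient(w: float, xs: list, ys: list) -> float:
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_gradient(w: float, xs: list, ys: list, num_workers: int) -> float:
    """Each worker computes a gradient on its shard; the results are then averaged."""
    shard = len(xs) // num_workers  # assumes the batch divides evenly
    local_grads = [
        gradient(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    return sum(local_grads) / num_workers  # stands in for an all-reduce average


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [2.0, 4.0, 6.0, 8.0]
    print("single device:", gradient(0.5, xs, ys))
    print("two workers:  ", data_parallel_gradient(0.5, xs, ys, num_workers=2))
```

With equal shard sizes the averaged gradient matches the single-device gradient, so adding workers shortens each step without changing the update, provided the interconnect can carry the gradient exchange.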

For enterprises building AI-native products, cluster-ready architecture is not a luxury; it is foundational.

Final Thoughts: Performance Predictability Wins

In 2026, AI infrastructure decisions are no longer just engineering choices. They are strategic commitments.

AI training servers must deliver:

• High VRAM

• Fast NVMe

• Sufficient PCIe lanes

• 10Gbps networking

• Scalable bandwidth

• Reliable power and cooling

Whether deploying RTX 4090 dedicated servers for cost-efficient fine-tuning or A100 bare metal servers for enterprise-grade LLM training, the key is architectural alignment with workload demands.

The best GPU for AI training is the one that balances performance, memory, scalability, and predictable throughput. And in AI, predictability is speed.

Speed is advantage.

Frequently Asked Questions About GPU Dedicated Servers for AI

How much VRAM do I need for AI training?

VRAM requirements are driven primarily by model size and batch configuration. For fine-tuning 7B–13B parameter models, 24GB of VRAM (such as what you get with an NVIDIA GeForce RTX 4090) is often sufficient when paired with mixed precision training and efficient memory handling.

As model sizes increase, memory headroom becomes critical. Larger models, higher batch sizes, and reduced reliance on gradient checkpointing typically require 48GB or 80GB of VRAM. Enterprise-grade GPUs like the NVIDIA A100 80GB variant allow teams to train without aggressive memory tradeoffs. In practical terms, VRAM defines your performance ceiling. Underprovisioning slows iteration; proper sizing unlocks speed.

Is RTX 4090 good for LLM training?

Within the right scope, absolutely.

The RTX 4090 has emerged as one of the most efficient GPUs for AI training servers in 2026. With 24GB of VRAM and strong tensor core performance, it performs exceptionally well for fine-tuning open-source LLMs, mid-sized training workloads, and inference-heavy pipelines.

It is not designed for massive multi-node enterprise model training, but for startups, applied AI teams, and internal R&D environments, RTX 4090 dedicated servers deliver impressive price-to-performance ratios.

Is A100 worth the cost?

That depends on scale and ambition.

The NVIDIA A100 was engineered specifically for large-scale AI and high-performance computing environments. With up to 80GB of high-bandwidth memory and support for NVLink in multi-GPU configurations, it excels in distributed training and large transformer architectures.

For organizations training substantial LLMs or running production-grade AI clusters, the faster training cycles and scalability often justify the premium. When iteration speed directly impacts revenue or competitive positioning, higher-end GPUs can pay for themselves quickly.

Can I run multiple GPUs in one server?

Yes, and for many workloads, it’s the preferred approach.

Multi-GPU systems allow distributed data parallelism and model parallelism within a single chassis. When supported by sufficient PCIe lanes, CPU resources, power delivery, and cooling, running multiple GPUs significantly reduces training time and increases throughput.

As models grow larger and training timelines tighten, multi-GPU servers or custom AI clusters become foundational rather than optional.

What is the difference between cloud GPU and dedicated GPU?

Cloud GPU instances offer convenience and short-term elasticity, but they often run within virtualized environments where performance can fluctuate due to shared infrastructure and network variability.

Dedicated GPU servers provide exclusive hardware access. That means consistent PCIe throughput, predictable NVMe performance, stable 10Gbps networking options, and high bandwidth allocations such as 100TB without unexpected throttling.

For AI training where performance consistency directly impacts development cycles, dedicated infrastructure removes uncertainty and allows teams to plan training timelines with confidence.

The ROI Case for GPU Dedicated Servers in 2026

AI infrastructure is no longer an experimental budget line. It is a capital allocation decision.

Training time directly impacts product release cycles. Product release cycles influence revenue timing. Revenue timing affects valuation, competitive positioning, and market share. When training runs are delayed because of insufficient VRAM, network bottlenecks, or shared cloud variability, the cost is not just technical; it’s financial.

GPU dedicated servers eliminate performance variance. With fixed hardware allocation, guaranteed 10Gbps port options, and high-capacity bandwidth such as 100TB, teams can forecast training windows accurately. That predictability allows leadership to plan model iterations, deployment schedules, and product launches with confidence.
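Forecasting a training window is simple arithmetic once step time is stable. The sketch below returns a best-case and worst-case duration, where `slowdown_fraction` is a hypothetical allowance for throughput variance. The step counts and the 25% variance figure in the usage lines are invented for illustration, not measurements of any provider.

```python
def training_window_hours(total_steps: int, seconds_per_step: float,
                          slowdown_fraction: float = 0.0) -> tuple:
    """Return (best_case, worst_case) wall-clock hours for a training run."""
    best = total_steps * seconds_per_step / 3600
    worst = best * (1 + slowdown_fraction)
    return best, worst


if __name__ == "__main__":
    steady = training_window_hours(36000, 1.0)
    variable = training_window_hours(36000, 1.0, slowdown_fraction=0.25)
    print("stable step time:  ", steady)
    print("variable step time:", variable)
```

The wider the gap between the two numbers, the harder it becomes to commit to a release date that depends on the run finishing.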

RTX 4090 dedicated servers provide efficient entry points for fine-tuning and applied AI development. A100 bare metal servers support enterprise-grade LLM training and distributed environments. Custom AI clusters allow horizontal scaling without architectural redesign.

The key advantage is control over compute, throughput and iteration speed.

In AI, iteration speed compounds. Faster experimentation produces better models. Better models improve user experience. Improved experience drives adoption. Adoption drives revenue.

That chain begins with infrastructure.

Organizations that treat AI training servers as strategic assets rather than temporary rentals gain measurable advantages in development velocity and cost stability. In 2026, that stability becomes a competitive moat.

When infrastructure performance is predictable, growth becomes predictable. And in AI-driven markets, predictability is power.

Board / Executive Takeaway

AI infrastructure is now a capital efficiency decision, not just an engineering preference. GPU dedicated servers reduce performance variance, compress model training timelines, and convert compute investment into predictable development velocity.

Organizations that control their AI training stack, through right-sized VRAM, high-throughput networking, and scalable cluster design, gain measurable speed advantages over competitors relying on shared cloud infrastructure. In 2026, infrastructure predictability directly influences product timing, revenue cadence, and enterprise valuation.

Ready to Build Your AI Training Infrastructure?

Whether you’re fine-tuning LLMs on RTX 4090 dedicated servers or deploying large-scale models on A100 bare metal servers, the architecture matters.

AI server hardware requirements depend on:

• Model size and VRAM requirements

• Single-node vs distributed training

• Dataset throughput needs

• Network bandwidth expectations

• Long-term scaling plans

We help teams design the best GPU servers for AI training that balance performance, memory, PCIe throughput, NVMe speed, and 10Gbps networking, without unnecessary overspend.

From single-GPU systems to custom AI clusters with high-bandwidth (100TB) allocations, the goal is simple: predictable training performance and scalable growth.

If you’re evaluating AI training servers for 2026 and want architecture aligned to your workload (not a generic cloud template), let’s build it correctly from the start.

Speak with our infrastructure team today

877-477-9454

sa***@*********st.com

Because in AI, iteration speed compounds and the right infrastructure determines how fast you compound it.